Introduction

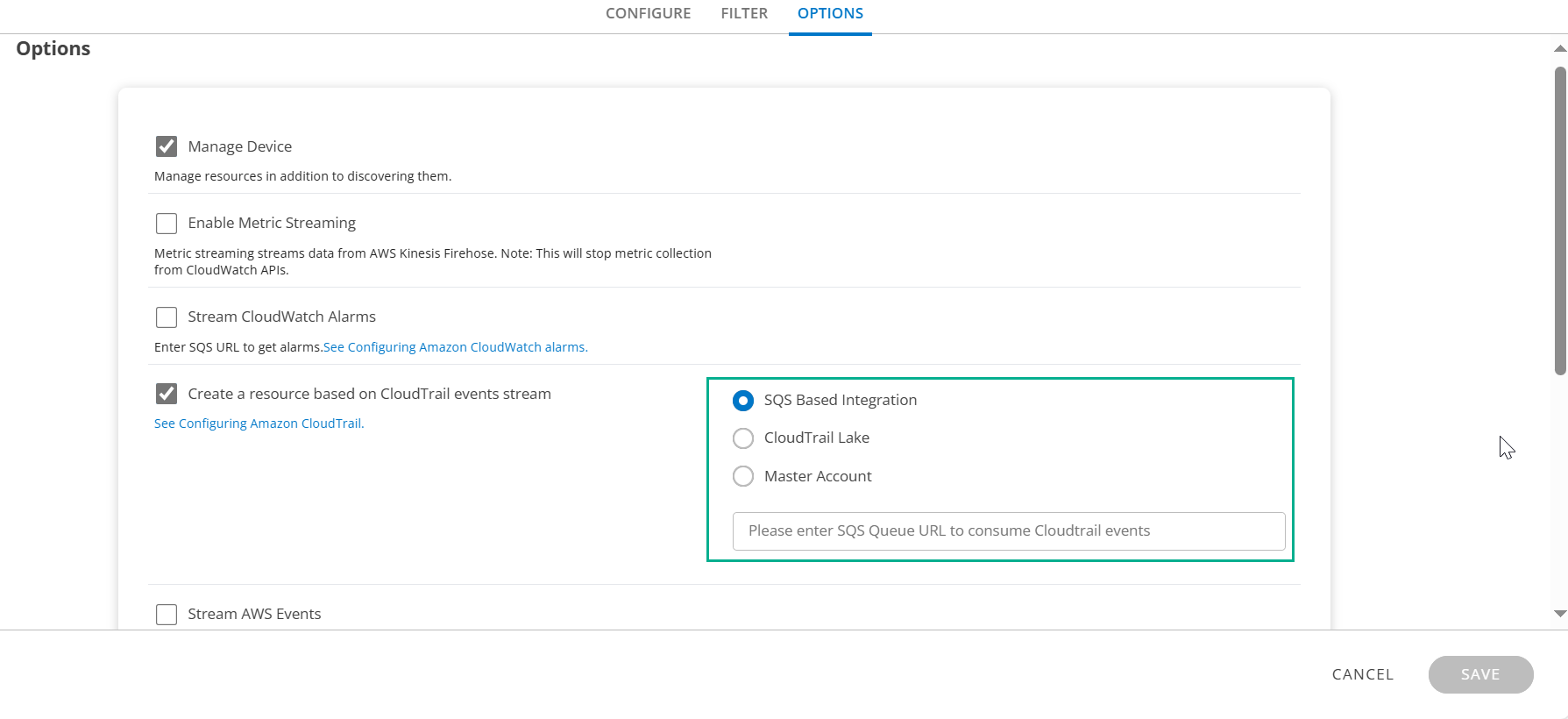

AWS CloudTrail generates events when a change occurs, such as the launch or termination of an instance. Actions taken by a user, role, or AWS service are recorded as events in CloudTrail. You can create resources in the platform by integrating with these CloudTrail event streams. The system supports the following methods to ingest and process CloudTrail data:

- SQS-Based: Stream events from an Amazon SQS queue that is subscribed to a CloudTrail log delivery stream.

- CloudTrail Lake: Use AWS CloudTrail Lake to collect and query events in a centralized data store.

- Master Account: Consolidate events from multiple AWS accounts using a master (management) account.

Configure Amazon CloudTrail - SQS-Based

AWS CloudTrail generates events when a change occurs, such as the launch or termination of an instance. Actions taken by a user, role, or AWS service are recorded as events in CloudTrail. In the SQS-based method, these events are read through the Amazon SQS queue URL configured for the trail and used to create events in OpsRamp.

Prerequisites

- Create an Amazon S3 bucket where all log files can be stored.

- Create an Amazon SNS topic.

- Create an Amazon SQS queue and subscribe it to the Amazon SNS topic.
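
For the SNS topic to deliver into the queue, the queue generally needs an access policy that allows Amazon SNS to send messages to it. The following is a minimal sketch of such a policy built in Python. The ARNs and the statement ID are placeholders, not values from this guide.

```python
import json


def build_queue_policy(queue_arn: str, topic_arn: str) -> dict:
    """Return an SQS access policy that lets one SNS topic send to one queue."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudTrailTopicToSend",  # hypothetical statement ID
                "Effect": "Allow",
                "Principal": {"Service": "sns.amazonaws.com"},
                "Action": "sqs:SendMessage",
                "Resource": queue_arn,
                # Restrict delivery to the single topic tied to the trail.
                "Condition": {"ArnEquals": {"aws:SourceArn": topic_arn}},
            }
        ],
    }


# Placeholder ARNs; substitute the values from your own account.
policy = build_queue_policy(
    "arn:aws:sqs:us-east-1:111122223333:cloudtrail-events",
    "arn:aws:sns:us-east-1:111122223333:cloudtrail-topic",
)
policy_json = json.dumps(policy, indent=2)
```

The resulting `policy_json` string can be pasted into the queue's access policy in the SQS console or set as the queue's `Policy` attribute.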

Steps

- Log in to your AWS management console.

- Navigate to Amazon CloudTrail.

- On the Dashboard, click Create Trail. See the AWS documentation on creating a trail.

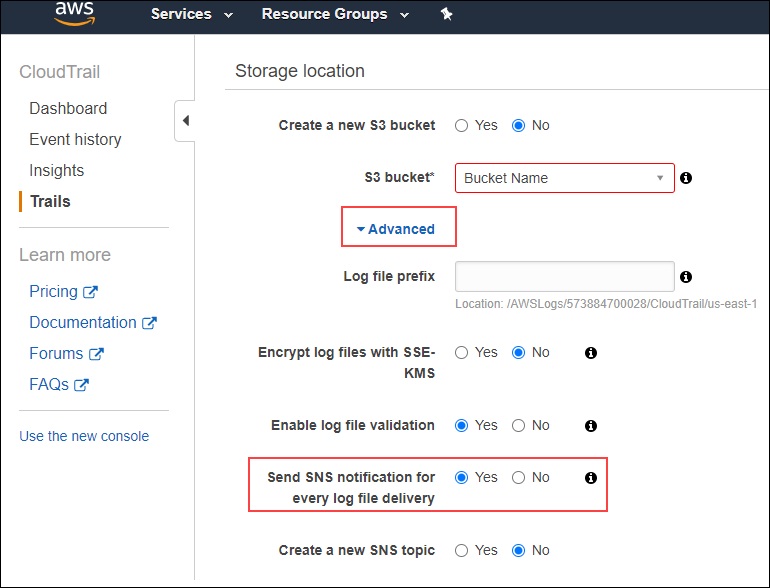

- While creating the trail, under Storage location, click Advanced.

- Set Send SNS notification for every log file delivery to Yes.

- Select the Amazon SNS topic and click Create Trail.

The SNS topic should be unique to the trail created. Use the Amazon SQS URL associated with the Amazon SNS topic to configure Amazon CloudTrail while creating or updating the AWS integration in OpsRamp.

Amazon CloudTrail is now configured to send events to OpsRamp.
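
To verify the pipeline independently, it can help to inspect what arrives in the queue. The sketch below parses one SQS message body and lists the log files it announces. It assumes the standard SNS envelope (no raw message delivery) and the `s3Bucket` and `s3ObjectKey` fields of CloudTrail delivery notifications. The sample body is hand-written for illustration, and this is not a description of how OpsRamp processes messages internally.

```python
import json


def extract_log_files(sqs_body: str) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs named in one CloudTrail delivery notification."""
    envelope = json.loads(sqs_body)
    # Without raw message delivery, SNS wraps the payload in a "Message" string.
    payload = json.loads(envelope["Message"]) if "Message" in envelope else envelope
    bucket = payload["s3Bucket"]
    return [(bucket, key) for key in payload.get("s3ObjectKey", [])]


# Hand-written sample body; a real message carries more envelope fields.
sample_body = json.dumps({
    "Type": "Notification",
    "Message": json.dumps({
        "s3Bucket": "example-trail-bucket",
        "s3ObjectKey": ["AWSLogs/111122223333/CloudTrail/us-east-1/2024/01/01/example.json.gz"],
    }),
})
log_files = extract_log_files(sample_body)
```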

Configure Amazon CloudTrail - CloudTrail Lake

To capture and query AWS activity logs using CloudTrail Lake, follow these steps to set up an Event Data Store and ensure proper permissions.

Step 1: Create an Event Data Store

- Sign in to the AWS Management Console and open the CloudTrail console.

- From the navigation pane, under Lake, choose Event data stores.

- Choose Create event data store.

- On the Configure event data store page, in General details, enter a name for the event data store.

- Specify a retention period for the event data store.

- Choose Next to configure the event data store.

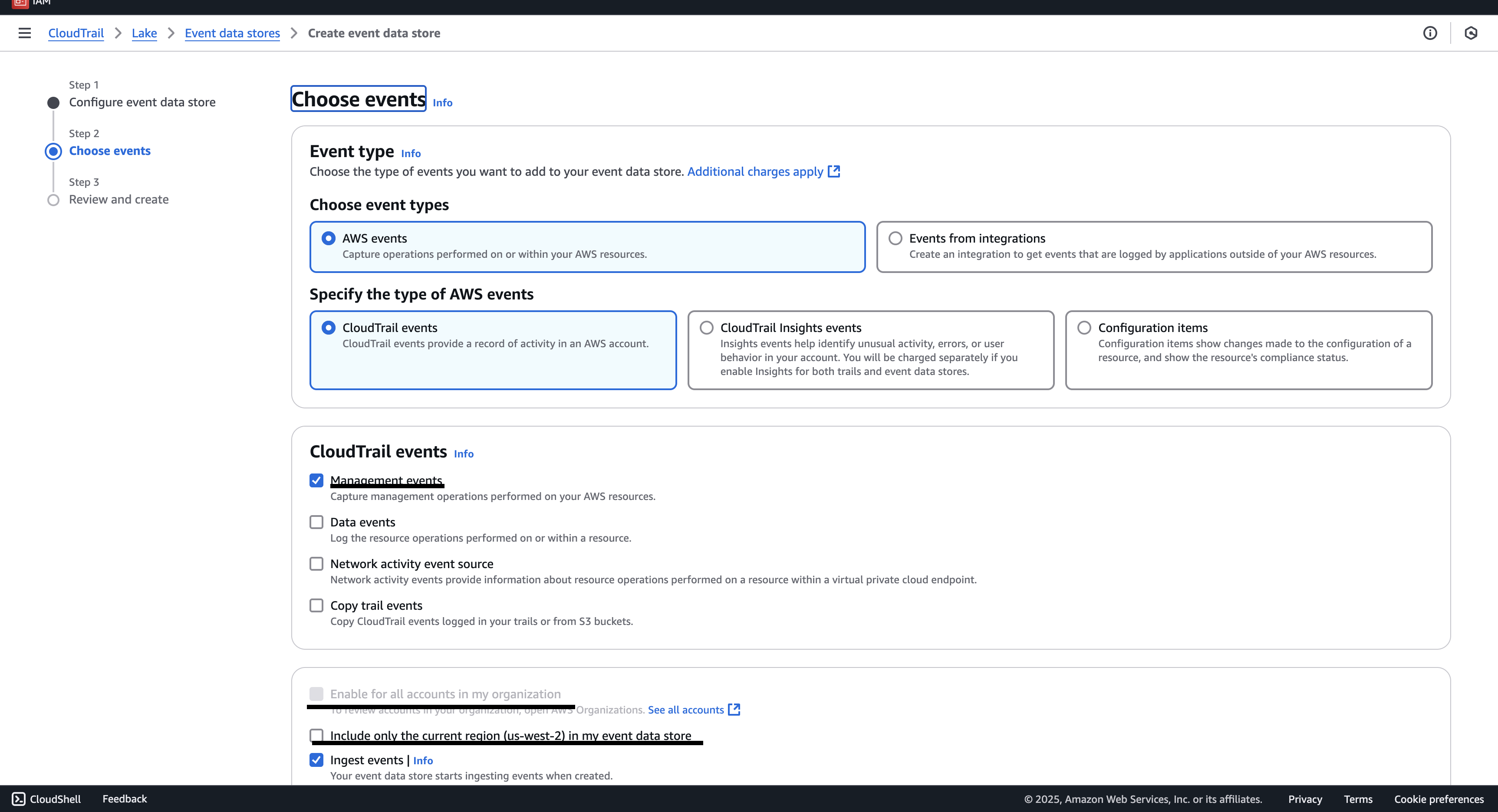

- On the Choose events page, choose AWS events, and then choose CloudTrail events.

- For CloudTrail events, choose at least one event type. By default, Management events is selected.

- To have your event data store collect events from all accounts in an AWS Organizations organization, select Enable for all accounts in my organization.

Note

You must be signed in to the management account or delegated administrator account for the organization to create an event data store that collects events for an organization. To capture events from all regions, select the All Regions setting.

- Choose Next to review your choices.

- On the Review and create page, review your choices.

- When you are ready to create the event data store, choose Create event data store.

After creating the event data store, open its details page and copy the ARN. You will need this ARN to configure CloudTrail Lake in the AWS integration within OpsRamp.
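
The same event data store can also be created programmatically. The sketch below assumes the boto3 CloudTrail client and its `create_event_data_store` call. The store name and retention period are hypothetical, and the second function only works with boto3 installed and AWS credentials configured.

```python
def event_data_store_params(name: str, retention_days: int,
                            organization: bool = False) -> dict:
    """Build keyword arguments for CloudTrail's CreateEventDataStore call."""
    return {
        "Name": name,
        "RetentionPeriod": retention_days,    # in days
        "MultiRegionEnabled": True,           # collect events from all regions
        "OrganizationEnabled": organization,  # needs management or delegated admin account
    }


def create_event_data_store(params: dict) -> str:
    """Create the event data store and return its ARN."""
    import boto3  # imported lazily so the helper above works without boto3

    response = boto3.client("cloudtrail").create_event_data_store(**params)
    return response["EventDataStoreArn"]


# Hypothetical name and retention period.
params = event_data_store_params("opsramp-events", retention_days=90)
```

Calling `create_event_data_store(params)` returns the ARN that the integration asks for.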

Step 2: Enable CloudTrail Lake in OpsRamp

- If you are using AWS Organizations, enable CloudTrail Lake at the organization level to automatically include events from all linked member accounts.

Note

- If the master account is not installed in the client environment, install it first, then configure the child accounts to receive events from the master configuration.

- For setups with a single master account and multiple clients (each with their own child accounts), add the master account to each client. The child accounts should then select the master account in their CloudTrail configuration.

- For standalone AWS accounts, repeat the setup steps above on each account individually to enable CloudTrail Lake.

Step 3: IAM Permissions for Query Access

Ensure that the AWS Identity and Access Management (IAM) role or user used by your application has the necessary permissions to query CloudTrail Lake. Required permissions may include:

    {
      "Effect": "Allow",
      "Action": [
        "cloudtrail:StartQuery",
        "cloudtrail:GetQueryResults",
        "cloudtrail:LookupEvents",
        "lakeformation:GetDataAccess"
      ],
      "Resource": "*"
    }
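
The block above is a single policy statement. IAM expects it inside a policy document with a `Version` and a `Statement` list. A minimal sketch of that wrapping follows. The helper name `wrap_statement` is illustrative only.

```python
import json

# Statement copied from the permissions listed above.
query_statement = {
    "Effect": "Allow",
    "Action": [
        "cloudtrail:StartQuery",
        "cloudtrail:GetQueryResults",
        "cloudtrail:LookupEvents",
        "lakeformation:GetDataAccess",
    ],
    "Resource": "*",
}


def wrap_statement(statement: dict) -> dict:
    """Wrap one statement in the envelope IAM expects for a policy document."""
    return {"Version": "2012-10-17", "Statement": [statement]}


policy_document = json.dumps(wrap_statement(query_statement), indent=2)
```

The `policy_document` string can be attached to the role or user as an inline or managed policy.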

Configure Amazon CloudTrail - Master Account

In environments where direct access to configure CloudTrail Lake across all individual AWS accounts is not available, you can designate one account as the Master Account to centralize event collection.

In this setup, CloudTrail Lake is configured only in a single AWS account (referred to as the Master Account), and all required CloudTrail events are streamed into this account. This eliminates the need to individually configure CloudTrail Lake in each account.

On the AWS Integration page, select the Master Account option and choose the configured master account from the dropdown list.

Benefits of the New CloudTrail Lake Integration Approach

The new CloudTrail Lake-based integration offers several advantages over the existing SQS-based method, particularly in multi-region and multi-account environments.

- Multi-Region Event Collection:

- Unlike the existing SQS-based approach, which is limited to a single region, the CloudTrail Lake method aggregates events across regions, improving visibility across your entire AWS footprint.

- Simplified Setup with CloudFormation:

- To streamline onboarding, a ready-to-use CloudFormation template will be provided:

- Automatically sets up CloudTrail Lake.

- Generates the required event datastore ARN.

- Reduces manual configuration steps.

- Flexible Account Support:

- Single Account Setup: Simply provide the generated datastore ARN to integrate CloudTrail Lake.

- Multi-Account (Master-Child) Setup:

- Set up CloudTrail Lake in the Master Account.

- Install the Master Account in the OpsRamp platform.

- Enable CloudTrail in Child Accounts without additional CloudTrail Lake configuration.

CloudTrail Lake Event Data Store (EDS) Best Practices

This section outlines recommendations for sizing, distributing, and optimizing AWS CloudTrail Lake Event Data Stores (EDS) for performance, cost efficiency, and reliability. It applies to both standalone AWS accounts and large organizations with master-child structures containing hundreds of accounts.

Key Recommendation: Limit each Event Data Store to 50 accounts.

AWS CloudTrail Lake Hard Limits

| Limit | Value | Impact |
|---|---|---|
| Concurrent Queries per Event Data Store | 10 (hard limit) | Maximum of 10 queries can run at the same time per EDS |
| StartQuery Rate (per account + region) | 3 TPS | Limits query initiation to 3 transactions per second |
| GetQueryResults Rate (per account + region) | 10 TPS | Limits result fetching to 10 transactions per second |

Why AWS recommends only 3 EDS per region:

- Rate limits are shared across all data stores in the same account and region.

- With 3 TPS for StartQuery, too many data stores cause contention.
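
Because the StartQuery limit is shared, a client that queries several data stores in one account and region benefits from a single shared throttle. The class below is a minimal sketch of one way to space calls at least 1/3 second apart. It is a simple minimum-interval limiter, not an AWS-provided mechanism, and the class name is illustrative.

```python
import time


class MinIntervalThrottle:
    """Space calls at least 1 / max_per_second seconds apart."""

    def __init__(self, max_per_second: float, clock=time.monotonic, sleep=time.sleep):
        self.interval = 1.0 / max_per_second
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = clock()

    def wait(self) -> float:
        """Block until the next call is allowed; return the seconds slept."""
        now = self.clock()
        delay = max(0.0, self.next_allowed - now)
        if delay:
            self.sleep(delay)
        # Schedule the following slot one interval after this call's start time.
        self.next_allowed = max(now, self.next_allowed) + self.interval
        return delay


# One throttle shared by every data store in the same account and region.
start_query_throttle = MinIntervalThrottle(max_per_second=3)
```

Calling `start_query_throttle.wait()` immediately before each StartQuery request keeps the combined rate at or below 3 per second.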

Event Data Store Optimization

1. Deployment Scenarios

| Scenario | Accounts | Recommendation |
|---|---|---|
| Single Account | 1 | Create one Event Data Store (EDS) in the primary region. Ideal for standalone, development, or test environments. |
| Small Organization | 2–50 | Use a single EDS containing all accounts, deployed in one region. A 10-minute query frequency is sufficient. |
| Medium Organization | 51–200 | Use 2–4 EDS (around 50 accounts per EDS) distributed across multiple regions for better reliability and performance. |
| Large Organization | 201–500 | Configure multiple EDS with a maximum of 50 accounts per EDS. Distribute across multiple regions. |
| Very Large Organization | 501–1000+ | Maintain the 50 accounts per EDS ratio. Distribute across 4–6 regions for scalability and fault tolerance. |

2. Optimal Account Segregation Strategy

Recommended: Regional Distribution

Example Scenario:

- Master account managing 500 child accounts

- Currently: All accounts stored in one EDS

- Query frequency: Every 10 minutes

- Goal: Split into multiple EDS for better performance

Relevant AWS Hard Limits

| Limit | Value | Description |
|---|---|---|
| Concurrent Queries per EDS | 10 | Hard limit |
| Query Timeout | 5 minutes | Queries longer than 5 minutes terminate |
| StartQuery Rate | 3 TPS | Per account + region |
| GetQueryResults Rate | 10 TPS | Per account + region |
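
These limits shape how a client should poll for results. The sketch below waits for one query with a 5-minute budget and a fixed poll interval. It assumes a boto3-style client whose `get_query_results` response carries a `QueryStatus` field. `FakeClient` and the `CLIENT_TIMEOUT` label are stand-ins invented for this example.

```python
import time

TERMINAL_STATES = {"FINISHED", "FAILED", "CANCELLED", "TIMED_OUT"}


def wait_for_query(client, query_id: str, timeout_s: float = 300.0,
                   poll_interval_s: float = 5.0, sleep=time.sleep) -> str:
    """Poll until the query reaches a terminal state or the time budget runs out."""
    waited = 0.0
    while True:
        status = client.get_query_results(QueryId=query_id)["QueryStatus"]
        if status in TERMINAL_STATES:
            return status
        if waited >= timeout_s:
            return "CLIENT_TIMEOUT"  # local label, not an AWS status
        sleep(poll_interval_s)
        waited += poll_interval_s


class FakeClient:
    """Stand-in for a CloudTrail client, used only for a dry run."""

    def __init__(self, statuses):
        self._statuses = iter(statuses)

    def get_query_results(self, QueryId):
        return {"QueryStatus": next(self._statuses)}


final_status = wait_for_query(FakeClient(["QUEUED", "RUNNING", "FINISHED"]),
                              "example-query-id", sleep=lambda seconds: None)
```

At a 5-second interval, each running query issues 0.2 GetQueryResults calls per second, so ten concurrent queries stay near 2 TPS against the 10 TPS limit.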

3. Recommended Configuration: 50 Accounts per Event Data Store

Why 50 Accounts Is Optimal

- Allows a 20% safety margin for:

- Query duration variability (2–5 minutes)

- Peak load periods

- Failed query retries

- Future growth

- Ensures balanced concurrency

- Keeps workloads well below AWS limits

- Supports reliable and resilient operations

Example Distribution

500 accounts ÷ 50 accounts per EDS = 10 EDS
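
The same division can be applied to an actual account list. The sketch below splits accounts into consecutive groups of at most 50. The account IDs are synthetic and the function name is illustrative.

```python
import math


def plan_event_data_stores(account_ids: list[str], per_store: int = 50) -> list[list[str]]:
    """Split accounts into consecutive groups of at most `per_store`."""
    return [account_ids[i:i + per_store] for i in range(0, len(account_ids), per_store)]


# Synthetic 12-digit account IDs for illustration.
accounts = [f"{n:012d}" for n in range(1, 501)]
groups = plan_event_data_stores(accounts)
store_count = math.ceil(len(accounts) / 50)
```

When the account count is not a multiple of 50, the last group is simply smaller, which is why the count uses a ceiling.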

4. Sizing Analysis: 50 vs 100 Accounts per EDS

| Metric | 50 Accounts | 100 Accounts |
|---|---|---|
| Queries per Hour | 300 | 600 |
| Avg Concurrent Queries | 5 (50%) | 10 (100%) |
| Peak Concurrent Queries | ~8 (80%) | >10 (overloaded) |
| Buffer for Spikes | 20% | 0% |
| Result | Safe/Scalable | High Risk of Overload |

Detailed Analysis

50 Accounts per EDS (Recommended):

- 300 queries/hour = 5 average concurrent queries (50% utilization)

- Peak concurrency: ~8 (80% utilization)

- Complies with AWS limits:

- StartQuery: 0.08 TPS (well under 3 TPS limit)

- GetQueryResults: 0.42 TPS (well under 10 TPS limit)

- Benefits:

- Safely handles spikes

- Supports retry logic

- 20% growth capacity

- Clean division into 10 stores

100 Accounts per EDS (Not Recommended):

- 600 queries/hour = 10 average concurrent queries (100% utilization)

- Peak concurrency exceeds 10, causing query queuing and delays

- Issues:

- No buffer for spikes

- Frequent limit breaches

- Higher failure rate

- No scalability headroom
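
The figures in this analysis can be reproduced with a short calculation. The sketch below assumes one query per account per 10-minute cycle. It also uses two values inferred from the numbers rather than stated in the text: an average query duration of about one minute (which yields 5 concurrent queries from 300 per hour) and roughly five GetQueryResults calls per query (which yields 0.42 TPS from 0.08 TPS).

```python
def sizing(accounts: int, query_interval_min: float = 10.0,
           avg_query_min: float = 1.0, polls_per_query: int = 5,
           max_concurrent: int = 10) -> dict:
    """Estimate query load for one Event Data Store."""
    queries_per_hour = accounts * 60.0 / query_interval_min
    # Little's law: average concurrency = arrival rate x average duration.
    avg_concurrent = queries_per_hour / 60.0 * avg_query_min
    start_tps = queries_per_hour / 3600.0
    return {
        "queries_per_hour": queries_per_hour,
        "avg_concurrent": avg_concurrent,
        "utilization": avg_concurrent / max_concurrent,
        "start_query_tps": round(start_tps, 2),
        "get_results_tps": round(start_tps * polls_per_query, 2),
    }


fifty = sizing(50)
hundred = sizing(100)
```

If queries typically run longer than one minute, pass a larger `avg_query_min`. Average concurrency rises in proportion, which strengthens the case for staying at or below 50 accounts per EDS.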

5. Event Detection Latency (10-Minute Query Frequency)

CloudTrail ingestion latency:

- Best case (1–2 min): 80% of events

- Typical case (5–10 min): 15% of events

- Worst case (15–20 min): 5% of events (during AWS service delays)

Query cycle wait:

- Event just after a query: ~10 min delay

- Event just before a query: ~0 min delay

- Average: 5 minutes

Processing time:

- Query execution: 2–5 minutes (depends on event volume)

- Result processing: 1–5 minutes (depends on data size)

- Total: 3–10 minutes

Detection summary:

- 90% of events detected within 25 minutes

- 5% may be delayed up to 35 minutes (due to ingestion latency)
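
The 25-minute and 35-minute figures appear to follow from adding the upper bound of each stage and using the average 5-minute query cycle wait. That reading is an inference from the numbers above, sketched here:

```python
AVG_CYCLE_WAIT_MIN = 5    # average wait for the next 10-minute query cycle
PROCESSING_MIN = (3, 10)  # query execution plus result processing

INGESTION_MIN = {
    "best": (1, 2),
    "typical": (5, 10),
    "worst": (15, 20),
}


def detection_window(case: str) -> tuple[int, int]:
    """Return the (low, high) end-to-end detection time in minutes."""
    low, high = INGESTION_MIN[case]
    return (low + AVG_CYCLE_WAIT_MIN + PROCESSING_MIN[0],
            high + AVG_CYCLE_WAIT_MIN + PROCESSING_MIN[1])


windows = {case: detection_window(case) for case in INGESTION_MIN}
```

Under these assumptions the typical case tops out at 25 minutes and the worst case at 35 minutes. An event that lands just after a query cycle adds up to 5 more minutes to either bound.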

6. Recommended Implementation Example (500 Accounts)

| Region | Event Data Stores | Accounts | Purpose |
|---|---|---|---|
| us-east-1 | 3 | 150 | Primary region |
| us-west-2 | 3 | 150 | Fault tolerance |
| eu-west-1 | 2 | 100 | Europe coverage |
| ap-southeast-1 | 2 | 100 | Asia-Pacific coverage |

Total: 10 EDS covering 500 accounts.
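
A plan like this can be checked mechanically before rollout. The sketch below maps each (region, store) slot from the table to a block of 50 accounts. It fills slots in list order purely for illustration, whereas a real assignment would group accounts by geography. The account IDs are synthetic.

```python
ACCOUNTS_PER_EDS = 50

# Region -> number of Event Data Stores, as in the table above.
REGION_PLAN = {"us-east-1": 3, "us-west-2": 3, "eu-west-1": 2, "ap-southeast-1": 2}


def assign_accounts(account_ids: list[str]) -> dict[tuple[str, int], list[str]]:
    """Map (region, store index) to the accounts that store would hold."""
    slots = [(region, i)
             for region, count in REGION_PLAN.items()
             for i in range(1, count + 1)]
    if len(account_ids) > len(slots) * ACCOUNTS_PER_EDS:
        raise ValueError("plan has too few Event Data Stores for these accounts")
    return {
        slot: account_ids[n * ACCOUNTS_PER_EDS:(n + 1) * ACCOUNTS_PER_EDS]
        for n, slot in enumerate(slots)
    }


assignment = assign_accounts([f"{n:012d}" for n in range(1, 501)])
```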

7. Why Multi-Region Distribution?

Key Advantages:

- Distributes API rate limits across regions.

- Improves fault tolerance: a regional failure doesn’t affect all accounts.

- Reduces network latency by aligning data with account geography.

- No extra cost: AWS charges based on data volume (ingestion, storage, and scanning), not on the number of Event Data Stores.

8. Cost Analysis: Single vs. Multiple EDS

Summary:

- There is no significant cost difference between single and multiple EDS setups.

Reason:

- AWS pricing is based on:

- Data ingestion

- Data storage

- Data scanning

- The number of EDS does not affect cost.
